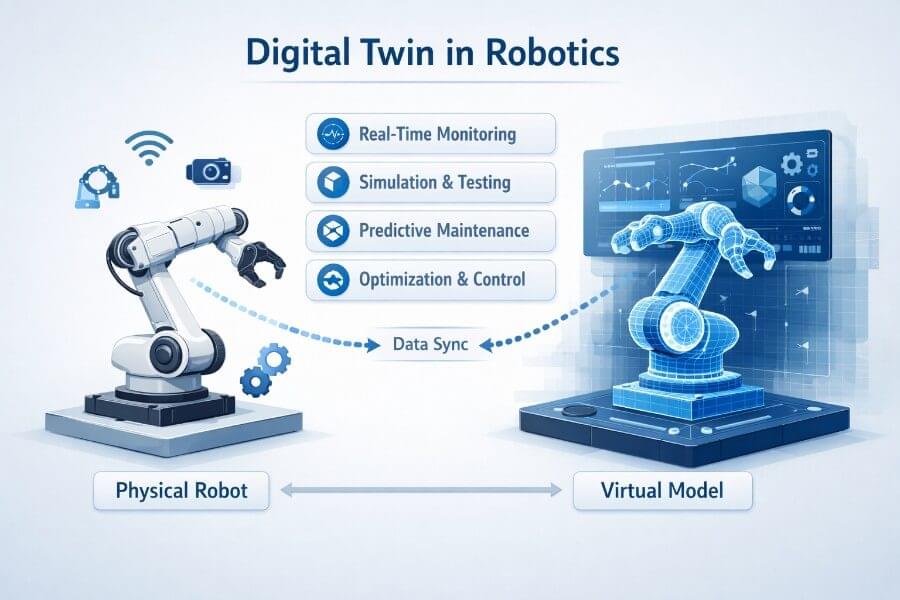

In robotics, the term *digital twin* is one of the most overused, and most misunderstood, concepts in modern engineering. A digital twin is a dynamic digital replica of a physical product or system that mirrors the state of its physical counterpart. Digital twins are used not only in robotics but also in construction, manufacturing, and other industries.