A practical journey from high latency to usable real-time speech-to-text

Real-time speech-to-text (STT) is changing how humans interact with machines, especially in robotics and automation. By turning a live audio stream into text while the user is still speaking, it lets people command and converse with robots naturally. As artificial intelligence makes robots more autonomous and capable, real-time STT is becoming a core feature of advanced robotic systems.

The global market value of industrial robot installations has reached an all-time high of US$ 16.7 billion, reflecting the growing adoption of robotics across industries. Additionally, the field of humanoid robotics is expanding rapidly, particularly in environments designed for humans, underscoring the increasing relevance of real-time speech-to-text solutions.

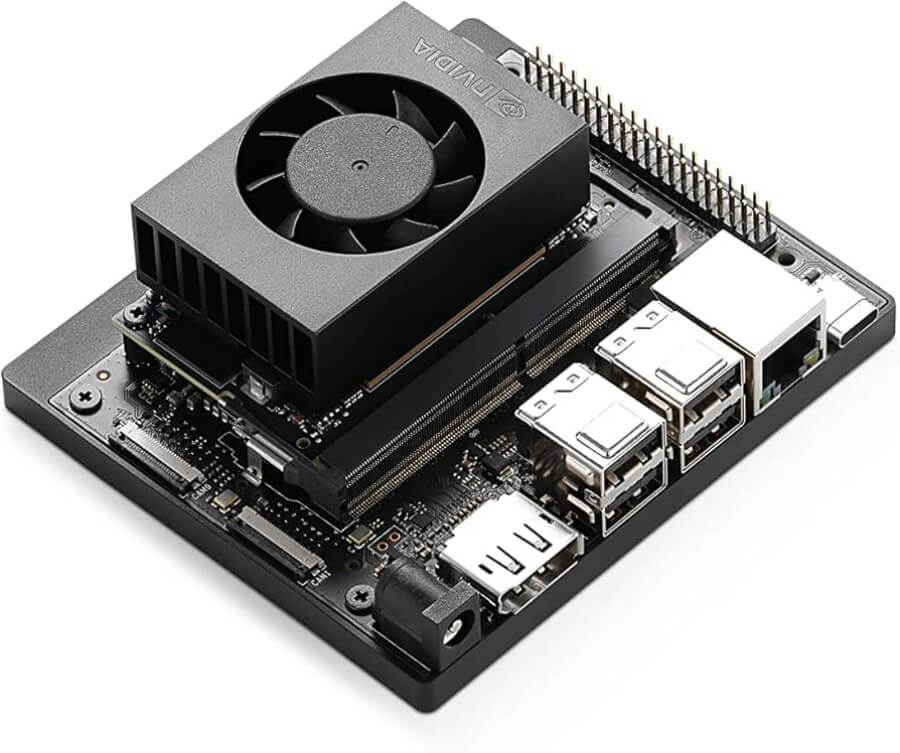

1. Why the Jetson Orin Nano Super?

The NVIDIA Jetson Orin Nano Super Developer Kit is a compact edge-AI platform designed for robotics, embedded AI, and real-time perception workloads. Running the latest JetPack software is essential for optimal performance and compatibility.

Its design for robotics applications draws on advanced, interdisciplinary engineering, including mechanical, electrical, and materials engineering.

Real-time data exchange with devices and operational technology is crucial for automation and robotics across industries, and the convergence of Information Technology (IT) and Operational Technology (OT) is accelerating demand for versatile robots.

Key characteristics (why it matters for STT)

CPU: 6-core ARM Cortex-A78AE

GPU: NVIDIA Ampere architecture (up to ~1024 CUDA cores)

AI performance: up to ~67 TOPS (sparse INT8) in Super mode, up from 40 TOPS on the original Orin Nano 8 GB

Memory: 8 GB unified LPDDR5

Power envelope: ~7–25 W, depending on the selected power mode

Power supply: Requires an efficient and reliable power supply to ensure stable operation in robotics applications

This makes the Orin Nano Super extremely attractive for on-device speech-to-text (STT):

No cloud dependency

Low latency potential

Tight integration with robotics stacks (ROS 2, GPIO, sensors)

However, getting usable real-time STT is not automatic. The rest of this article walks through the trial-and-error path we took to reach a practical solution.

2. First attempt: Whisper on CPU (Tensor / PyTorch)

What is Whisper?

Whisper is an open-source speech recognition system developed by OpenAI. Its models are trained on a large amount of multilingual, multitask supervised data. As a result, they transcribe speech accurately across many languages and accents and stay robust to background noise and varied audio quality, which is useful in noisy robotics environments. Because Whisper can run fully on-device, it is a popular choice for developers who want reliable speech recognition without cloud services. One caveat matters for latency: Whisper was built for transcription rather than streaming, and its encoder processes audio in fixed 30-second windows.
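The fixed window explains why a short utterance can still be expensive. At 16 kHz, one 30-second window is 480,000 samples. Shorter clips are zero-padded to that length before encoding. The sketch below is a minimal NumPy illustration of that padding idea. The function name `pad_or_trim_30s` is our own and is not part of any library API.

```python
import numpy as np

SAMPLE_RATE = 16000                            # Whisper's expected input rate (Hz)
WINDOW_SECONDS = 30                            # Whisper encoder window length (s)
WINDOW_SAMPLES = SAMPLE_RATE * WINDOW_SECONDS  # 480,000 samples


def pad_or_trim_30s(audio: np.ndarray) -> np.ndarray:
    """Return exactly one 30-second window of mono float32 audio.

    Shorter clips are zero-padded at the end; longer clips are truncated.
    """
    audio = np.asarray(audio, dtype=np.float32)
    if audio.shape[0] >= WINDOW_SAMPLES:
        return audio[:WINDOW_SAMPLES]
    padding = WINDOW_SAMPLES - audio.shape[0]
    return np.pad(audio, (0, padding))


if __name__ == "__main__":
    three_seconds = np.ones(3 * SAMPLE_RATE, dtype=np.float32)
    window = pad_or_trim_30s(three_seconds)
    # Only 10% of the window carries speech; the rest is padding.
    print(window.shape[0], np.count_nonzero(window) / window.shape[0])
```

For this reason, shorter audio chunks do not automatically translate into proportionally shorter inference times.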

The obvious first step was to run Whisper using CPU inference, either:

via PyTorch

or via Tensor abstractions on ARM

Either way, the pipeline is the same: a microphone captures the sound, the audio is digitized (16 kHz mono for Whisper), and the model turns it into text.

What worked

Transcription quality was correct

Setup was relatively simple

It also gave us a useful baseline for CPU-only speech-to-text performance.

What failed

Massive latency

On the Orin Nano Super, long utterances took 20–30 seconds to transcribe

CPU usage went to 100%, starving the rest of the system

Example symptoms

User finishes speaking → … 10 s … → … 20 s … → transcription finally appears
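One way to quantify this is the real-time factor (RTF), defined as processing time divided by audio duration. With an RTF above 1, the system falls further behind the longer the user speaks. Interactive use needs an RTF well below 1. The helper below is a minimal sketch. `transcribe_fn` is a hypothetical placeholder for whichever transcription call you are benchmarking, not a specific library function.

```python
import time
from typing import Callable, Tuple

import numpy as np

SAMPLE_RATE = 16000  # Hz, Whisper's expected input rate


def measure_rtf(
    transcribe_fn: Callable[[np.ndarray], str],
    audio: np.ndarray,
    sample_rate: int = SAMPLE_RATE,
) -> Tuple[str, float, float]:
    """Run transcribe_fn once and return (text, elapsed_s, rtf).

    rtf = processing time / audio duration.
    """
    audio_seconds = len(audio) / sample_rate
    start = time.perf_counter()
    text = transcribe_fn(audio)
    elapsed = time.perf_counter() - start
    return text, elapsed, elapsed / audio_seconds


if __name__ == "__main__":
    # Dummy backend that "takes" 0.5 s, standing in for a real model.
    def fake_transcribe(a: np.ndarray) -> str:
        time.sleep(0.5)
        return "hello"

    ten_seconds = np.zeros(10 * SAMPLE_RATE, dtype=np.float32)
    text, elapsed, rtf = measure_rtf(fake_transcribe, ten_seconds)
    print(f"{text!r}: {elapsed:.2f} s for 10 s of audio, RTF = {rtf:.2f}")
```

For example, with illustrative numbers, a 10-second utterance that takes 25 seconds to transcribe has an RTF of 2.5.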

This approach is not viable for:

conversational robots

interactive assistants

interruption-based dialogue systems

Conclusion: CPU-only Whisper on Orin Nano is fine for offline batch transcription, not for interaction.

3. Second attempt: NVIDIA Riva

Next, we evaluated NVIDIA Riva, NVIDIA’s official speech AI stack.

Riva comes with extensive documentation, tutorials, and community support for building real-time speech-to-text applications.

On paper, Riva is ideal

GPU-accelerated ASR

Optimized for NVIDIA hardware

Production-grade models

Riva’s platform is also designed to support generative AI workloads, enabling advanced speech and language applications.

In practice, on Jetson

Heavy container stack

Large memory footprint

Complex configuration (Triton, gRPC, model repos)

Overkill for a single embedded robot

Practical issues encountered

Long setup time

Difficult debugging

Tight coupling with NVIDIA’s ecosystem

Hard to customize for experimental pipelines

Conclusion:

Riva is powerful, but too complex and heavyweight for an embedded robotics use case where you want:

transparency

control

fast iteration

4. The turning point: whisper.cpp with CUDA

The real breakthrough came from whisper.cpp.

Because it is a self-contained C/C++ library, whisper.cpp can also be integrated directly with robot controllers to enable speech-driven control.
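Here is a minimal sketch of what that integration can look like on the application side. The snippet normalizes a transcript, since Whisper output usually includes capitalization and punctuation, and then looks it up in a command table. The phrases and command names are hypothetical placeholders. A real robot would forward the result to its own controller interface, for example a ROS 2 topic.

```python
import re
from typing import Optional

# Hypothetical phrase -> command table; adapt to your robot's controller.
COMMANDS = {
    "stop": "STOP",
    "move forward": "MOVE_FORWARD",
    "turn left": "TURN_LEFT",
    "turn right": "TURN_RIGHT",
}


def normalize(text: str) -> str:
    """Lower-case, replace punctuation with spaces, and collapse whitespace."""
    text = re.sub(r"[^a-z0-9\s]", " ", text.lower())
    return " ".join(text.split())


def match_command(transcript: str) -> Optional[str]:
    """Return the command for the longest phrase found in the transcript, or None."""
    # Pad with spaces so phrases only match whole words ("stop" != "stopwatch").
    words = f" {normalize(transcript)} "
    for phrase in sorted(COMMANDS, key=len, reverse=True):
        if f" {phrase} " in words:
            return COMMANDS[phrase]
    return None


if __name__ == "__main__":
    for t in [" Stop!", "Please turn left.", "What time is it?"]:
        print(repr(t), "->", match_command(t))
```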

Why whisper.cpp?

Written in C/C++

Minimal dependencies

Designed for performance

Supports CUDA acceleration

Excellent fit for embedded systems

Its performance comes from pairing software optimization with hardware acceleration. The lightweight ggml tensor library underneath whisper.cpp keeps overhead low, and the CUDA backend offloads the heavy matrix operations to the GPU.

Unlike Python-based stacks, whisper.cpp gives you:

deterministic behavior

low overhead

full control over threading and memory

5. Compiling whisper.cpp with CUDA on Jetson

Prerequisites

JetPack installed (CUDA, cuBLAS available)

Enough swap space (important!)

Native build on the Jetson

Clone the repository

git clone https://github.com/ggerganov/whisper.cpp.git
cd whisper.cpp

Configure the build

cmake -B build \
  -DGGML_CUDA=ON \
  -DCMAKE_BUILD_TYPE=Release

Compile

cmake --build build -- -j2

⚠️ Tip:

On Orin Nano, avoid -j6. Memory pressure can kill the build.

-j2 is slow but stable.
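Before starting a long CUDA build, it can be worth checking how much RAM and swap are actually available. The sketch below reads `/proc/meminfo`, which is standard on Linux, including Jetson, and suggests a `-j` value. The 2 GB-per-job budget is a rough, hypothetical rule of thumb for heavy C++/CUDA compilation units, not a measured requirement, so adjust it for your own build.

```python
from pathlib import Path
from typing import Dict

GB_IN_KB = 1024 * 1024      # /proc/meminfo reports sizes in kB
PER_JOB_KB = 2 * GB_IN_KB   # Hypothetical memory budget per parallel compile job


def parse_meminfo(text: str) -> Dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value_in_kB}."""
    info: Dict[str, int] = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields and fields[0].isdigit():
            info[key.strip()] = int(fields[0])
    return info


def suggest_jobs(info: Dict[str, int], max_jobs: int = 6) -> int:
    """Suggest a -j value from available RAM plus free swap (6 CPU cores max)."""
    budget = info.get("MemAvailable", 0) + info.get("SwapFree", 0)
    return max(1, min(max_jobs, budget // PER_JOB_KB))


if __name__ == "__main__":
    meminfo = Path("/proc/meminfo")
    if meminfo.exists():
        info = parse_meminfo(meminfo.read_text())
        swap_gb = info.get("SwapTotal", 0) / GB_IN_KB
        print(f"Swap configured: {swap_gb:.1f} GB")
        print(f"Suggested: cmake --build build -- -j{suggest_jobs(info)}")
    else:
        print("/proc/meminfo not available (not a Linux system?)")
```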

Successful build output (excerpt)

[ 96%] Built target whisper-cli
[ 99%] Built target vad-speech-segments
[100%] Built target whisper-server

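At this point, whisper.cpp is built with CUDA support enabled. The runtime confirmation comes when a model is loaded: the startup log should report the CUDA device in use.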
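You can double-check the configuration without reading build logs, because CMake records every cached option in `build/CMakeCache.txt` as `NAME:TYPE=VALUE` lines. The sketch below looks up `GGML_CUDA`, the option passed at configure time. The file location assumes the `-B build` layout used above.

```python
from pathlib import Path
from typing import Optional

# CMake's common "true" constants (compared case-insensitively).
TRUTHY = {"ON", "1", "TRUE", "YES", "Y"}


def cache_value(cache_text: str, name: str) -> Optional[str]:
    """Return the value of NAME from CMakeCache.txt content, or None."""
    for line in cache_text.splitlines():
        # Skip comments, docstring lines, and anything without an assignment.
        if line.startswith(("#", "//")) or "=" not in line:
            continue
        key, _, value = line.partition("=")
        if key.split(":", 1)[0] == name:
            return value.strip()
    return None


def cuda_enabled(cache_text: str) -> bool:
    """True if GGML_CUDA is set to a CMake true constant."""
    value = cache_value(cache_text, "GGML_CUDA")
    return value is not None and value.upper() in TRUTHY


if __name__ == "__main__":
    cache = Path("build/CMakeCache.txt")
    if cache.exists():
        print("GGML_CUDA enabled:", cuda_enabled(cache.read_text()))
    else:
        print("build/CMakeCache.txt not found: run the cmake configure step first")
```

This only confirms that the option was set at configure time. The GPU activity you see while transcribing is still the real proof.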

6. Running Whisper with GPU acceleration

Download a model

sh ./models/download-ggml-model.sh base.en

This fetches models/ggml-base.en.bin, the English-only base model used in the examples below.

Basic GPU-accelerated transcription

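./build/bin/whisper-cli \
  -m models/ggml-base.en.bin \
  -f samples/jfk.wav

When whisper.cpp is built with CUDA, GPU inference is enabled by default, so no extra flag is needed. Recent versions provide -ng / --no-gpu to force CPU-only inference for comparison; check --help on your build.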

Observed improvements

GPU usage immediately visible (tegrastats)

CPU usage drops significantly

Latency reduced from tens of seconds to a few seconds

Much more stable under continuous usage
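To reproduce this comparison on your own board, you can time the same command with and without GPU offload. The sketch below assumes the paths used above. It also assumes the `-ng` (`--no-gpu`) switch that recent whisper-cli versions list in their help output. Treat that switch as an assumption and confirm it with `./build/bin/whisper-cli --help`, since options change between releases.

```python
import subprocess
import time
from pathlib import Path
from typing import List

CLI = "./build/bin/whisper-cli"
MODEL = "models/ggml-base.en.bin"
SAMPLE = "samples/jfk.wav"


def build_command(use_gpu: bool) -> List[str]:
    """Assemble the whisper-cli call; GPU is the default in a CUDA build."""
    cmd = [CLI, "-m", MODEL, "-f", SAMPLE]
    if not use_gpu:
        cmd.append("-ng")  # Assumed flag: disable GPU offload
    return cmd


def time_run(cmd: List[str]) -> float:
    """Run a command to completion and return wall-clock seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return time.perf_counter() - start


if __name__ == "__main__":
    if not Path(CLI).exists():
        print(f"{CLI} not found: build whisper.cpp first")
    else:
        for use_gpu in (True, False):
            label = "GPU" if use_gpu else "CPU"
            print(f"{label}: {time_run(build_command(use_gpu)):.2f} s")
```

Each run includes loading the model from disk. That per-call overhead is exactly what the persistent whisper-server setup avoids.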

Low-Latency Speech-to-Text with whisper-server and Python

Compiling whisper.cpp with CUDA is only half of the story.

To actually achieve low-latency speech-to-text on Jetson, the recommended architecture is to run whisper-server as a persistent process and stream short audio chunks to it from a lightweight Python client.

This avoids:

model reload on every transcription

Python ↔ GPU overhead

unnecessary memory allocations

High-level architecture

Microphone → Python (audio capture)

→ HTTP request

→ whisper-server (CUDA)

→ Transcription result

1. Installing Python dependencies

On Jetson (Ubuntu 20.04 / 22.04), start by installing system dependencies:

sudo apt update
sudo apt install -y \
  python3 \
  python3-pip \
  portaudio19-dev \
  ffmpeg

Then install Python packages:

pip3 install --user \
  sounddevice \
  numpy \
  requests

These libraries are intentionally minimal:

sounddevice: real-time microphone capture

numpy: audio buffering

requests: HTTP communication with whisper-server

2. Starting whisper-server with CUDA

From the whisper.cpp directory:

./build/bin/whisper-server \
  -m models/ggml-base.en.bin \
  --port 8080

Because the binary was built with CUDA, the server uses the GPU by default, so no extra flag is required.

At this point:

the model is loaded once

CUDA kernels stay warm

the server is ready to accept audio chunks

3. Python client script (low latency)

Below is a minimal Python script that:

records short audio chunks

sends them to whisper-server

prints the transcription result

#!/usr/bin/env python3
"""
Low-latency Whisper client for whisper-server.

This script:
- captures short audio chunks from the microphone
- sends them to whisper-server via HTTP
- prints the transcription result

It is designed to keep latency low by:
- using small audio buffers
- avoiding any model loading in Python
"""

import os
import subprocess
import tempfile

import numpy as np
import requests
import sounddevice as sd

# ----------------------------
# Audio configuration
# ----------------------------
SAMPLE_RATE = 16000    # Whisper expects 16 kHz audio
CHANNELS = 1           # Mono audio
RECORD_SECONDS = 3     # Short chunk for low latency

# whisper-server endpoint
WHISPER_SERVER_URL = "http://127.0.0.1:8080/inference"


def record_audio():
    """
    Records audio from the default microphone and returns
    a 1-D NumPy float32 array.
    """
    print("Recording...")
    audio = sd.rec(
        int(RECORD_SECONDS * SAMPLE_RATE),
        samplerate=SAMPLE_RATE,
        channels=CHANNELS,
        dtype="float32",
    )
    sd.wait()  # Block until the buffer is full
    print("Recording finished")
    return audio.squeeze()  # (N, 1) -> (N,)


def save_wav(audio, path):
    """
    Converts raw float32 samples to a WAV file using ffmpeg.
    """
    subprocess.run(
        [
            "ffmpeg",
            "-y",                    # Overwrite the (empty) temp file
            "-f", "f32le",           # Input: raw little-endian float32
            "-ar", str(SAMPLE_RATE),
            "-ac", str(CHANNELS),
            "-i", "pipe:0",          # Read samples from stdin
            path,
        ],
        input=audio.astype(np.float32).tobytes(),
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
        check=True,                  # Raise if ffmpeg fails
    )


def transcribe(audio):
    """
    Sends audio to whisper-server and returns the transcription text.
    """
    with tempfile.NamedTemporaryFile(suffix=".wav", delete=False) as tmp:
        wav_path = tmp.name
    try:
        save_wav(audio, wav_path)
        with open(wav_path, "rb") as f:
            response = requests.post(
                WHISPER_SERVER_URL,
                files={"file": f},
                timeout=30,          # Don't hang forever if the server stalls
            )
        response.raise_for_status()
        return response.json()["text"]
    finally:
        os.remove(wav_path)


def main():
    """
    Main loop:
    - record
    - transcribe
    - print result
    """
    while True:
        audio = record_audio()
        text = transcribe(audio)
        print(f"> {text.strip()}")


if __name__ == "__main__":
    main()
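If spawning ffmpeg and writing a temporary file for every chunk becomes a bottleneck, the chunk can be encoded in memory with Python's standard `wave` module instead. This is an alternative sketched here, not the approach used in the script above. The float32 samples are clipped to [-1, 1] and converted to 16-bit PCM, the standard WAV input format for whisper.cpp.

```python
import io
import wave

import numpy as np

SAMPLE_RATE = 16000  # Hz, matches the client script
CHANNELS = 1         # Mono


def float32_to_wav_bytes(audio: np.ndarray, sample_rate: int = SAMPLE_RATE) -> bytes:
    """Encode mono float32 samples in [-1, 1] as a 16-bit PCM WAV byte string."""
    clipped = np.clip(np.asarray(audio, dtype=np.float32), -1.0, 1.0)
    pcm16 = (clipped * 32767).astype("<i2")  # Little-endian int16, as WAV requires
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(CHANNELS)
        wav.setsampwidth(2)          # 2 bytes per sample = 16-bit
        wav.setframerate(sample_rate)
        wav.writeframes(pcm16.tobytes())
    return buf.getvalue()            # wave leaves a caller-supplied buffer open
```

Inside `transcribe()`, the result can be sent directly with `files={"file": ("chunk.wav", wav_bytes, "audio/wav")}`, which removes both the ffmpeg process and the temporary file.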

4. Why this approach keeps latency low

This design works well on Jetson because:

whisper-server keeps the model resident in GPU memory

Python only handles audio I/O

Short chunks reduce end-to-end delay

Inference runs on the GPU, leaving the CPU largely free for audio capture and other robot tasks

In practice, this setup delivers:

seconds-level latency instead of tens of seconds

stable performance even under continuous speech

a clean separation between audio capture and inference
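The effect of chunk length can be estimated with simple arithmetic. In the sequential loop above, a word spoken at the very start of a chunk waits for the whole chunk to be recorded and then for inference. While the server is transcribing, the microphone is not recording at all. The sketch below computes both quantities and ignores WAV encoding and HTTP overhead. The 1-second inference time in the example is a hypothetical placeholder, so substitute your own measurement.

```python
def worst_case_latency(chunk_s: float, inference_s: float) -> float:
    """Delay for a word spoken at the start of a chunk: record it all, then infer."""
    return chunk_s + inference_s


def deaf_fraction(chunk_s: float, inference_s: float) -> float:
    """Share of wall-clock time the sequential loop is not capturing audio."""
    return inference_s / (chunk_s + inference_s)


if __name__ == "__main__":
    inference_s = 1.0  # Hypothetical per-chunk inference time; measure your own
    for chunk_s in (1.0, 3.0, 5.0):
        print(
            f"chunk={chunk_s:.0f} s  "
            f"worst-case latency={worst_case_latency(chunk_s, inference_s):.1f} s  "
            f"microphone idle={deaf_fraction(chunk_s, inference_s):.0%}"
        )
```

With 3-second chunks and 1 second of inference, the worst case is 4 seconds and the loop misses a quarter of the audio. Shorter chunks cut latency but raise the idle share unless inference also gets faster.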

In the next article, this Python client will be extended with:

voice activity handling

interruption support

wake word logic

But even in its current form, this setup is already usable for real embedded interaction on Jetson.

7. AI Performance Optimization for Real-Time STT on Jetson

The integration of artificial intelligence into cyber-physical systems (CPS) has changed how robots interact with the physical environment and with humans. In robots designed for human interaction, such as humanoids, real-time speech-to-text is a cornerstone capability. It lets a robot understand spoken commands, respond naturally, and adapt to changing conditions, whether in a smart factory, a hospital, or civil infrastructure.

On a platform like the Jetson Orin Nano Super, optimizing STT is what turns speech into a usable input for the robot's control loop. A CPS tightly couples computation with physical processes and control systems, so the robot must process sensor data, run control algorithms, and interact with humans at the same time. Speech recognition becomes a reliable part of control and decision-making only if it fits into that loop.

Achieving this means balancing the computational demands of AI models against the constraints of an embedded system. On Jetson, GPU acceleration and lean software components such as whisper.cpp keep speech recognition fast and reliable while the robot continues to handle path planning, sensor fusion, and process control. Careful resource allocation, model selection, and system-level integration are the main levers for preserving both AI performance and overall system efficiency.

Ultimately, optimizing real-time speech recognition is not only about speed. It is about enabling robots to interact intelligently and safely with the physical world. As CPS technologies evolve, responsive, accurate, and robust speech-driven control will remain a key differentiator for next-generation robotic systems.

8. Why this approach works on Jetson

| Aspect | CPU Whisper | Riva | whisper.cpp + CUDA |
|---|---|---|---|
| Latency | ❌ High | ✅ Low | ✅ Low |
| Complexity | ✅ Low | ❌ High | ✅ Moderate |
| Control | ❌ Limited | ❌ Limited | ✅ Full |
| Embedded-friendly | ❌ | ❌ | ✅ |
| Debuggability | ❌ | ❌ | ✅ |

whisper.cpp with CUDA hits the sweet spot:

fast enough

simple enough

transparent enough

9. Key takeaways

The Jetson Orin Nano Super can handle real-time STT

CPU-only Whisper is not suitable for interaction

NVIDIA Riva is powerful but too heavy for many robotics projects

whisper.cpp compiled locally with CUDA is the most practical solution

This setup becomes a solid foundation for:

conversational robots

offline voice assistants

speech-driven control systems

wider adoption of real-time speech-to-text across industries such as automotive, where it can improve flexibility, safety, and efficiency

collaborative robots, where industry standards for safety and security are crucial for effective human-robot collaboration

robotics and automation projects that help employers address labor shortages and improve workplace efficiency

AI-driven robots that work independently alongside humans, with safety and security as a priority

new forms of human-robot interaction, as AI changes how robots interact with their environments and with people

What’s next?

In the next article, we will build on this foundation and cover:

voice activity handling

interruption-friendly dialogue

wake word integration

Future articles will look at the broader CPS picture, including the integration of other devices such as sensors and actuators. A CPS couples computation with physical processes: it operates in real time, responds to changes in the physical environment with minimal delay, and relies on sensor data to make decisions. This is driving advances across many sectors. In smart factories, for example, AI-driven robots can anticipate equipment failures before they occur, and the convergence of IT and OT makes those robots more versatile.

But that’s a story for another deep dive.

If you are building robotics or CPS systems on Jetson, mastering low-level tools like whisper.cpp is often the difference between a demo and a deployable system.